
The cover of the newspaper El Mundo last Tuesday, April 4, caused a stir in the journalistic world and raised the big question: with artificial intelligence, will it still be possible to distinguish lies from reality? The question also leads us to rethink the use of this new technology, which has burst into the online world with force. Although it has many productive uses, new dangers also arise when its remarkable accuracy is used maliciously, from generating academic papers to impersonation and pornographic content.

A cover made with AI to warn about its risks

If you have not yet seen the El Mundo cover we are referring to, it shows Pablo Iglesias, former leader of Podemos, and Yolanda Díaz, second vice president, Minister of Labor and leader of Sumar, in a photo where they are seen hugging from behind. The fake photo was created by United Unknown (a collective of video editors that has dedicated itself in recent years to political satire) using various AI tools such as DALL-E 2, Midjourney and Stable Diffusion.

The image of Pablo Iglesias and Yolanda Díaz on the cover of the newspaper El Mundo

The purpose of the cover was to draw public attention to a report by the journalist Rodrigo Terrasa that discusses the risks of AI. But the impact was greater than expected, and the stunt was covered all over Spanish television. The various uses of AI in recent months have set off alarms among technology experts, educational institutions and the media.

The great challenge of artificial intelligence: “Soon it will be impossible to tell the truth from the lie”

Brutal report by @rterrasa with images by @unitedunknown https://t.co/UNHSAXka9O pic.twitter.com/TDAZNdyWYl

– Gonzalo Suárez (@gonzalosuarez) April 4, 2023

5 examples of misuse of AI

AI is a very powerful tool that has the potential to transform many aspects of society. However, as with any technology, there is also the risk that it will be used inappropriately or maliciously. Below we collect 5 examples of malicious uses of artificial intelligence:

1. School and university students delegate tasks to ChatGPT

Concern is running high in the education sector. Teachers are alarmed by the way students use AI, delegating to it the preparation of academic work such as essays or projects. While AI can be a useful tool for research and data analysis, its misuse could be considered plagiarism or academic fraud.

An example of this is the case reported by the outlet Motherboard, in which a biochemistry student who goes by @innovate_rye on Reddit explained that some AI tools are useful for simple tasks that require long answers. He also said that he used an AI to complete a university assignment in which he was asked to name 5 good and bad things about biotechnology.

“I would send the AI a question like ‘what are the five good and bad things about biotechnology?’ and it would generate a response that got me an A. I still do my homework on the things I need to learn to pass, I just use the AI to take care of the things I don’t want to do or that seem insignificant to me,” the student said.

The only solution that educational institutions have found for the moment is to regulate students' use of AI. Some schools and universities around the world, including in the United States, Australia, India, France and Spain, have banned ChatGPT, considering its use by students illegitimate.

In New York, the use of ChatGPT has been banned in all public schools. Meanwhile, The Guardian reported that the eight leading Australian universities, which make up the so-called Group of Eight, have been forced to modify their assessment methods to prevent their students from using AI to get straight A’s.

2. Fake images of politicians and celebrities to make up news

One of the most famous cases was (and still is) that of the manipulated images of Pope Francis in various modern outfits. The most popular was one in which he was seen wearing a Balenciaga-style coat, which went around social networks several times before it was discovered that the work was the product of an AI.

The images of the president of France, Emmanuel Macron, in the middle of a demonstration, and of former US president Donald Trump being arrested, both situations that never happened, also became famous.

The Pope, Emmanuel Macron and Donald Trump, protagonists of images created with AI. Source: RTV.es

Even the AI tool Midjourney decided to discontinue its free version because of the proliferation of these images.

Also, social networks like Instagram and TikTok will begin to indicate which posted content is generated by AI, precisely to prevent a false scenario from being passed off as a true one.

3. Deepfakes to make pornographic content

Deepfakes are an AI-based technique that uses algorithms to create false content, such as videos that appear to show real people saying or doing things they never actually said or did. This is accomplished by overlaying a person’s face on an existing video, which can be of a different person or of an event that never happened.

Although deepfakes can be applied in a positive way (for example, in the production of audiovisual content for entertainment or the creation of digital models for the film industry), they can also be used to spread misinformation or to deliberately deceive people, for example by creating pornographic content of public figures.

This practice has already affected personalities such as Scarlett Johansson, Emma Watson, Taylor Swift, Gal Gadot and Maisie Williams. There are also cases involving content creators such as Sweet Anita, the UK streamer with more than 1.9 million followers on Twitch, whose face was used to create pornographic content that is offered online.

Fake image of actress Scarlett Johansson created with AI

4. Impersonation for theft and fraud

AI has also opened up opportunities for some people to impersonate others. With the deepfake technology mentioned above, it is possible to assume someone's identity thanks to the great detail achieved when generating images. Criminals can use deepfakes to create fake profiles on social media, carry out phishing or launch cyberattacks.

A real case of this risk is that of a British company that received a call supposedly from its CEO, ordering the transfer of almost 240,000 dollars (220,000 euros) to a Hungarian bank. The call was credible because the voice was identical to the CEO's, something achieved through hours of analysis by an AI that studied recordings of the person’s voice in detail in order to replicate it perfectly.

Physical traits can also be recreated. According to research carried out by the Blavatnik School of Computer Science and the School of Electrical Engineering, just 9 AI-generated faces, built from the most common patterns in large image banks, are enough to impersonate between 42% and 64% of people. “Face-based authentication is extremely vulnerable, even if there is no information about the identity of the target,” the report states.

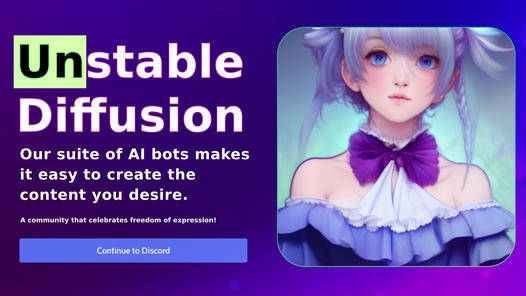

5. Creation of inappropriate content

Finally, one of the most harmful uses of AI is the production of inappropriate content, such as images and videos that contain violence or pornography or that violate the law, something that can cause serious psychological damage to users or be used for bullying and extortion online.

An example of how AI is being used to generate this content is Unstable Diffusion, a Discord server where several users generate pornography with the help of bots trained on hundreds of thousands of images. Interestingly, the tool was originally created to generate artistic images, but its use was soon distorted.

Unstable Diffusion is currently divided into several channels, each catering to a different sexual preference or fetish. Some are aimed only at men, others only at women, trans people, etc. However, some can be very unusual and even disturbing, such as non-human sex, horror scenarios, etc.

Another case that aroused controversy was that of Lensa, an application that went viral for generating avatars from photographs. Generally, when the images are of men, the results show warriors, heroes or politicians; but when they are of women, there is a clear bias towards creating avatars of nude models. Melissa Heikkilä, a journalist specializing in AI for Technology Review, documented the phenomenon and found that of 100 avatars generated with her face, 16 were nude and 14 had tight, suggestive clothing.

Images created by Lensa

Separately, a study conducted by Johns Hopkins University and the Georgia Institute of Technology showed that an AI system can develop racist and sexist biases. This became evident after the scientists showed images of different people to AI-driven robots and asked them to pick people out according to the terms they were given. When asked to point to a criminal, they selected a black person, and when given the term housewife, they selected women.

Do you know of any other cases of AI misuse? We'd love to hear from you 👇

Photo: Depositphotos

